Today I’ve been kicking around the ICT office with Alex, figuring out how to make Jenkins (our wonderful CI server) build and publish the latest version of the CWD with all the bells and whistles like compilation of CSS using LESS, minification, validation of code and so-on. As part of this we managed to fix a couple of bits and pieces which had been bugging me for a while, namely the fact that GitHub commit notifications weren’t working properly (fixed by changing the repository URI in the configuration) and the fact that Campfire integration wasn’t working (fixed by hitting it repeatedly with a hammer).

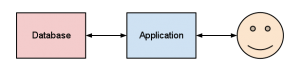

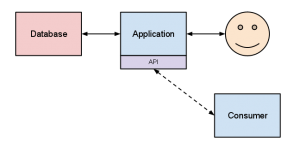

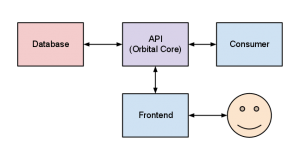

This brought me to thinking about how our various things tie in together, so I set about charting a few of them up. After a while I realised the chart had basically expanded into a complete flowchart of the various tools and processes that hang together to keep the code flowing in a steady stream from my brain – via my fingers – into an actual deployment on the development server. Since it may be of interest to some of you, here’s a pretty picture:

This is (approximately) the toolchain I currently use for Orbital, including rough details of what is being passed around

The beauty of this is that the vast majority of the lines happen completely by themselves — I get to spend my days living in the small bubble of my local development server and dipping in and out of Pivotal Tracker to update stories. The rest is magically happening as I work, and the constant feedback through all our monitoring and planning systems (take a look at SplendidBacon for an epic high-level overview) means that the rest of the project team and any project clients can see what’s going on at any time.