With the Orbital project at its end, and plans for a University research information / research data service afoot, I’m reviewing the excellent work carried out by our (now-departed) developers Harry Newton and Nick Jackson – work which linked up CKAN, the Orbital ‘bridge’ application, and the Lincoln Repository (EPrints) using SWORD – described in earlier blog posts here and here.

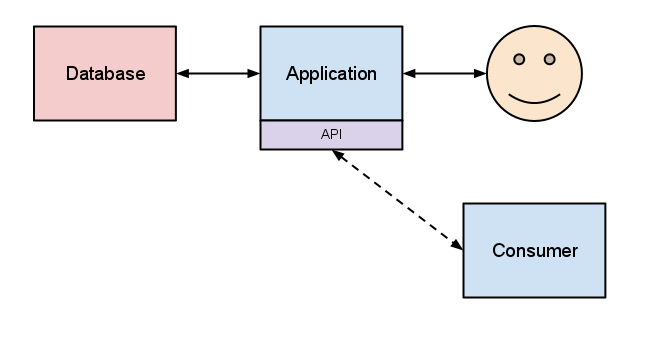

“One important piece of work that we’re undertaking at the moment in Orbital is the facility to deposit the existence of a dataset, from CKAN and the University’s new Awards Management System (AMS), into our (EPrints) Repository via SWORD – at the same time requesting a DOI for the dataset via the DataCite API. The software at the centre of this operation is what we refer to as Orbital Bridge.”

This deposit workflow is now broadly working as it should. I think only a few tweaks would be needed to turn it into a working tool for the University of Lincoln.

Most urgent is the need for the University to sign up with the DataCite DOI service, which would secure a DOI for each dataset record deposited from CKAN and hence formally published by the University. This subscription should form part of the new research information service.

The underlying code could also be reused for SWORD deposits from other sources of metadata (e.g. the Library’s discovery system, Find it at Lincoln) into the Lincoln Repository, the University’s bibliographic ‘system of record’.
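
To give a flavour of what a SWORD deposit involves, here is a minimal sketch of building the kind of Atom entry that a SWORD v2 client might send to a repository. This is not the Orbital Bridge code: the function name `build_sword_entry`, the choice of Dublin Core terms, and the example values are all my own assumptions for illustration, and I have not checked which SWORD version or packaging the bridge actually uses.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"
DCTERMS_NS = "http://purl.org/dc/terms/"


def build_sword_entry(title, description, creators, source_url):
    """Build an Atom entry describing a dataset (hypothetical helper)."""
    ET.register_namespace("atom", ATOM_NS)
    ET.register_namespace("dcterms", DCTERMS_NS)

    entry = ET.Element(f"{{{ATOM_NS}}}entry")
    ET.SubElement(entry, f"{{{ATOM_NS}}}title").text = title
    ET.SubElement(entry, f"{{{DCTERMS_NS}}}abstract").text = description
    for name in creators:
        ET.SubElement(entry, f"{{{DCTERMS_NS}}}creator").text = name
    # Assumption: the link back to the source record travels as dcterms:source.
    ET.SubElement(entry, f"{{{DCTERMS_NS}}}source").text = source_url
    return ET.tostring(entry, encoding="unicode")


# Example values are invented for illustration only.
entry_xml = build_sword_entry(
    title="Example dataset",
    description="A short description of the dataset.",
    creators=["A. Researcher"],
    source_url="https://ckan.example.org/dataset/example-dataset",
)
print(entry_xml)
```

The same entry-building step would apply whatever the metadata source is, which is why the approach looks reusable for other systems.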

Warning: this is an extremely screenshot-heavy blog post! Click on any one of the screenshots below to view a larger image.

Here’s a step-by-step walkthrough of the entire process of adding a dataset to CKAN, and depositing it as a record in the Lincoln Repository.

- Go to the Researcher Dashboard at: https://orbital.lincoln.ac.uk/ and click on “Sign In”.

- Enter your staff account ID and password to sign in to the Researcher Dashboard.

- Once you have been signed in and returned to the Researcher Dashboard, click on your name (in the top right-hand corner) and then click on “My Projects”.

- You will see an overview of your research projects – both funded projects (derived from the AMS), and unfunded projects you have added locally. Click on the name of the project you want to add data to.

- You will be taken to a page for that research project. On the right-hand side of this page, under the heading “Options”, click on “Create Research Data Environment”.

- You will be taken to the University’s CKAN research data platform, where a page/group will have been created which corresponds to your project in the Researcher Dashboard. Sign in to CKAN using your staff account ID and password (there is currently no single sign-on between the Researcher Dashboard and CKAN). You should then be returned to the same page, but you will probably be sent to the CKAN home page instead, in which case you will have to find your project again under the “Groups” menu.

- Toward the top of the project screen in CKAN, click on “Add Dataset” > “New Dataset…”.

- Fill in the form with information about the overall dataset, including the following fields:

- Title

- URL

- License (N.B. US spelling!)

- Description

- Then click on “Add Dataset”

- If you now click on the “Further information” tab on the left-hand menu, you can add further information about the dataset (this is not obvious from the initial dataset form).

- To attach individual data document(s) – which CKAN refers to as “resources” – to the dataset, scroll down the page and click on “Upload a file” (there are other options) > “Choose file” > “Upload”.

- Then fill in the form with the following basic information about the “resource”:

- Name

- Description

- Format

- Resource Type

- Datastore enabled (ticked by default)

- Mimetype

- Mimetype (Inner)

- “Extra Fields” (user-defined, or used by Orbital)

- To deposit a record for this dataset in the Lincoln Repository, go back to the Orbital Researcher Dashboard at: https://orbital.lincoln.ac.uk/ and navigate to your project. Toward the bottom left of the page you should now see a table containing the dataset(s) you have created in CKAN for this project. Choose which dataset you want to deposit, and hit the “Publish to Lincoln Repository” button.

- The Researcher Dashboard will then display a deposit form containing the following fields (some of which should be auto-populated from CKAN fields, but do not appear to be):

- Title

- Description

- Type of Data

- Keywords

- Subjects

- Divisions

- Metadata visibility [Show|Hide]

- People

“Publishing will publicly announce the existence of your dataset on the Lincoln Repository, as well as start the process of long-term preservation of your data.

“Usually you should only publish a dataset either at the end of a research project, or if the data is being cited in a paper. Publishing a dataset will place some restrictions on the changes you can make to the dataset in the future, such as removing your ability to delete the data. It will also generate a DOI, which allows your dataset to be uniquely identified and located using a simple identifier.

“Please check the information in this form and make any necessary changes, as this is the information which will be entered into the published record of the dataset.

“If you have any questions about this process please contact a member of the research services team for advice or assistance.”

- When you hit the “Publish Dataset” button, the dataset record from CKAN will be used to create a record in the Lincoln Repository. The record will be submitted for review by the Repository team, who will then make it live. N.B. for the time being, you will see an error “Validation errors: [doi] is a required string” – this happens because the University does not currently have access to the live DataCite DOI service, which would secure a DOI for each dataset record deposited from CKAN. This should form part of the new research information service.

- Here’s an example of a record in the Lincoln Repository, created from a CKAN dataset and made live by the Repository team.
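
For anyone wanting to script the dataset-creation part of this walkthrough rather than click through it, the dataset form fields map fairly naturally onto the payload for CKAN's `package_create` action. The sketch below only builds that payload; it assumes a CKAN version that exposes the action API, and the helper names (`slugify`, `build_package_payload`) and example values are hypothetical rather than anything taken from Orbital.

```python
import json
import re


def slugify(title):
    """Turn a title into a lowercase, hyphenated dataset name."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug[:100]  # keep the name to a conservative length


def build_package_payload(title, url, license_id, description, group_name):
    """Map the dataset form fields onto a package_create payload."""
    return {
        "name": slugify(title),
        "title": title,                    # "Title" on the form
        "url": url,                        # "URL"
        "license_id": license_id,          # "License"
        "notes": description,              # "Description"
        "groups": [{"name": group_name}],  # the project's CKAN group
    }


# Example values are invented for illustration only.
payload = build_package_payload(
    title="Example Dataset",
    url="https://example.org/project",
    license_id="cc-by",
    description="A short description of the dataset.",
    group_name="example-project",
)
print(json.dumps(payload, indent=2))
```

A payload like this would be sent as JSON in a POST to the CKAN site's `/api/3/action/package_create` endpoint, with the user's API key in the `Authorization` header.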

Problems with the deposit process as it currently stands:

- Permissions are not correctly cascaded from a project in the Researcher Dashboard to a group in CKAN.

- There is currently no single sign-on between the Researcher Dashboard and CKAN.

- When CKAN challenges a user to log in to a group, they should be redirected back to the group page after logging in – instead they get sent back to the CKAN home page, in which case they will have to look again for their project under the “Groups” menu.

- A minor one – in CKAN “License” (noun) appears in US spelling (should be “Licence”).

- In order to add all the information needed to deposit a dataset from CKAN, the user has to click the “Further information” tab on the left-hand menu (this is not obvious from the initial dataset form).

- Some of the field labels in CKAN are a bit opaque or use technical terms (“Mimetype”) which could do with explanation.

- When depositing to EPrints, some of the deposit fields should be auto-populated from CKAN fields, but this does not appear to be happening. The fields affected are:

- “Description” (could be derived from CKAN dataset/resource Description fields)

- “Type of Data” (could be derived from CKAN resource Format field)

- Repository records created from CKAN have the data “Creator” attached, but not the “Maintainer”.

- Repository records created from CKAN don’t have a link back to the CKAN dataset (should go in the EPrints “Official URL” field) – this will be required to provide access to the data.

- After deposit, users see the error message “Validation errors: [doi] is a required string” – the University does not currently have access to the live DataCite DOI service, which would secure a DOI for each dataset record deposited from CKAN.
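
Several of the problems above (missing auto-population, no “Maintainer”, no link back to CKAN, the DOI error) come down to how a CKAN dataset is mapped onto the deposit form. Here is a rough sketch of what that mapping and a pre-deposit check could look like. It is an illustration under my own assumptions, not a description of how Orbital Bridge works: the function names, the form keys and the fallback rules are hypothetical, and the error wording simply mimics the message quoted above.

```python
def prefill_deposit_form(dataset, ckan_base_url):
    """Derive deposit form values from a CKAN dataset dict (hypothetical)."""
    resources = dataset.get("resources", [])
    # Prefer the dataset description, else the first resource description.
    description = dataset.get("notes") or next(
        (r["description"] for r in resources if r.get("description")), ""
    )
    # Take "Type of Data" from the first resource that declares a format.
    type_of_data = next((r["format"] for r in resources if r.get("format")), "")
    return {
        "title": dataset.get("title", ""),
        "description": description,
        "type_of_data": type_of_data,
        "creator": dataset.get("author", ""),
        "maintainer": dataset.get("maintainer", ""),
        # Link back to the dataset page, intended for "Official URL".
        "official_url": f"{ckan_base_url.rstrip('/')}/dataset/{dataset['name']}",
        "doi": dataset.get("doi"),  # stays None until a DOI is minted
    }


def validate_deposit(form, required=("title", "doi")):
    """Return one error message per missing required string field."""
    return [
        f"[{field}] is a required string"
        for field in required
        if not isinstance(form.get(field), str) or not form[field].strip()
    ]
```

Running `validate_deposit` before the deposit is sent would let the Dashboard explain a missing DOI up front, rather than showing the error after the record has already been created.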