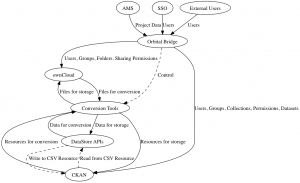

It’s been a while since I gave you an update on the technical side of Orbital, so here’s a lightning-fast overview of what’s going on.

CKAN

We’re still working on fine-tuning CKAN for our needs. Although we’ve made progress on theming, the datastore, HTTPS and a few other tweaks, we’re still plagued by mixed HTTP/HTTPS resources, plugins which are difficult to install, broken sign-in using our OAuth 2 SSO service, broken search and a complete unwillingness of the Recline preview to work. I suspect a lot of this is down to unfamiliarity with the codebase and with Python in general, although some areas of CKAN do feel like they’re a collection of hacks built on top of some more hacks built on a framework which is built on another framework which is built on a collection of libraries which is built on a hack.
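For anyone wondering what “mixed HTTP/HTTPS resources” looks like in practice, here’s a minimal sketch of how you might hunt for them in a rendered page. It isn’t part of CKAN or of our deployment; the class and function names are made up for illustration, and it only uses Python’s standard `html.parser` module. It simply lists sub-resources that a page served over HTTPS would still request over plain HTTP.

```python
from html.parser import HTMLParser


class MixedContentFinder(HTMLParser):
    """Collect sub-resource URLs that use plain HTTP (hypothetical helper)."""

    # (tag, attribute) pairs that can make a browser fetch a sub-resource.
    # Ordinary <a href> links are deliberately left out: they are navigation,
    # not embedded content.
    RESOURCE_ATTRS = {
        ("script", "src"),
        ("img", "src"),
        ("iframe", "src"),
        ("link", "href"),
    }

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if (tag, name) in self.RESOURCE_ATTRS and value:
                if value.lower().startswith("http://"):
                    self.insecure.append((tag, value))


def find_mixed_content(html):
    """Return a list of (tag, url) pairs for insecure sub-resources."""
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure
```

Feeding it the HTML of a page fetched over HTTPS gives a quick list of templates or plugins that still hard-code `http://` URLs. It won’t catch resources loaded from CSS or JavaScript, so treat it as a first pass rather than a full audit.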

In short, CKAN is still in need of a lot of work before our deployment can be considered production-ready (hence the “beta” tag). That said, we are already using it to store some research data, and the aspects which we’ve managed to get working are working well. We’re going easy though, because CKAN 1.8 and 2.0 are apparently due to land in the next couple of months.

Orbital Bridge

Our awesomely named Orbital Bridge will serve as the central point for all RDM activity around a project, as well as helping people through the process of general project management by being a springboard to our existing policy and training documentation.

Bridge’s public-facing side is currently in a very basic state, with only static content, but it is serving as a test of our deployment toolchain. Behind the scenes, however, Harry has been working on ways of shuffling data around between systems using abstraction layers for things such as datasets, files, people and projects. Today we sat down with Paul and went through the minimal metadata required to construct records to an acceptable standard, which will lead to additional work on both CKAN and our existing ePrints repository to smooth the transfer of records between them.
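To make the abstraction-layer idea a bit more concrete, here’s a minimal sketch of what a system-neutral dataset with a minimal-metadata check could look like. This is not Harry’s actual code. The `Dataset` class, the `REQUIRED_FIELDS` list and the `to_ckan_package` function are all hypothetical, and the choice of required fields is an assumption rather than the set we agreed with Paul. The keys in the output dictionary (`name`, `title`, `notes`, `author`) are modelled on CKAN’s package fields as I understand them, so check them against the CKAN version you’re running.

```python
from dataclasses import dataclass, field

# Assumed minimal metadata; the real list is whatever gets agreed for the project.
REQUIRED_FIELDS = ("title", "description", "creators")


@dataclass
class Dataset:
    """A system-neutral description of a dataset (hypothetical)."""

    identifier: str
    title: str = ""
    description: str = ""
    creators: list = field(default_factory=list)

    def missing_metadata(self):
        """Return the names of required fields that are empty."""
        return [name for name in REQUIRED_FIELDS if not getattr(self, name)]


def to_ckan_package(dataset):
    """Map a Dataset onto a CKAN-style package dictionary.

    Refuses to produce a record that lacks the minimal metadata.
    """
    missing = dataset.missing_metadata()
    if missing:
        raise ValueError("missing metadata: " + ", ".join(missing))
    return {
        "name": dataset.identifier,
        "title": dataset.title,
        "notes": dataset.description,
        "author": ", ".join(dataset.creators),
    }
```

The point of the design is that each target system gets its own small mapping function, while the check for minimal metadata lives in one place on the neutral object, so a record that is good enough for one system is good enough for all of them.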

AMS

The University’s new Awards Management System is designed to help researchers plan their funded research, walking them through the process of building their bid. The system itself has begun its roll-out across the University, and as soon as we’re given access to the APIs we’ll be integrating the AMS with Orbital Bridge, allowing seamless creation of a research project based on the data in the AMS.

This work also helps to inform what we’re doing in Bridge around abstracting the notion of a ‘project’ across all our different systems.

Kumo

Our ongoing OpenStack project, which we will use as the foundation for our technical infrastructure, is slowly moving closer to a state in which we can begin to develop on it. Tied in with this effort is our continued work on automating our provisioning, configuration, deployment, maintenance, monitoring and scaling.