One of the cool things about Orbital from my point of view is that I’m not just responsible for putting together a bit of software that runs on a web server, but also for designing the reference platform on which that software runs.

At this point I could digress into discussing exactly what boxes we’re running Orbital on top of, but that doesn’t really matter. What is more interesting is how the various servers click together to build the complete Orbital platform, and how those servers can help us scale and provide a resilient service.

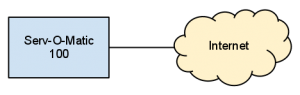

You’re probably used to thinking of most web applications like this:

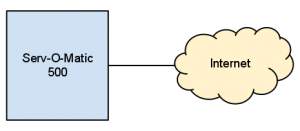

It’s simple. You install what you need to run your application on a server, hook it up to the internet, and off you go. Everything is contained on a single box, which gives you epic simplicity benefits and is often a lot more cost efficient, but you lose scalability. If one day your application has a traffic spike, your Serv-O-Matic 100 may not be able to cope. The solution is to make your server bigger!

This is all well and good, until you start to factor in resiliency as well. Your Serv-O-Matic 500 may be sporting 16 processor cores and 96GB of RAM, but it’s only doing its job until the OS decides it’s going to fall over, or your network card gives up, or somebody knocks the Big Red Switch.

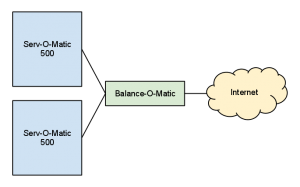

Once you’ve recovered from the embarrassment, it’s time to build resiliency. Generally speaking you’ll do this by running more than one server and putting a load balancer in front of them. Load balancers are usually very specialised bits of hardware, much like network switches, which exist to do one thing and to do it reliably. Your load balancer is much less likely to flop over and explode than a server is, and if you want you can get really clever with multiple redundant load balancers. Here’s a simplified look at what’s happening:

At this point you’ve got a resilient platform: should a server fall over, it’s not going to completely wipe out your application. Unfortunately for you, however, your application relies on a database, and having two distinct copies of the database probably isn’t going to cause too much happiness for users who have filed away their work on one box, only for the load balancer to throw them to the other one. Enter another layer of server-fu, this time your clustered database servers acting as redundant copies of each other.
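To make the load balancing step a bit more concrete, here’s a minimal sketch in Python of a round-robin balancer that skips servers it has been told are down. It is only an illustration: the class name, the method names and the `web-1`/`web-2` server names are all made up for this example, and real load balancers are dedicated hardware or software that work out server health for themselves.

```python
class RoundRobinBalancer:
    """Toy load balancer: hands out servers in turn, skipping any marked down.

    Hypothetical illustration only; not how any particular product works.
    """

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # index of the next server to try

    def mark_down(self, server):
        # In real life a health check would do this automatically.
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        # Try each server at most once per call, starting where we left off.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


balancer = RoundRobinBalancer(["web-1", "web-2"])
first_three = [balancer.pick() for _ in range(3)]

balancer.mark_down("web-1")  # somebody knocked the Big Red Switch
after_failure = [balancer.pick() for _ in range(2)]
```

With both servers up, requests alternate between them; once `web-1` is marked down, every request lands on `web-2` and the application stays available.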

All going well so far. To scale this model you can now use one of two options: add beefier servers, or add more servers horizontally. However, depending on the software in question and the underlying database architecture you may run into some problems. The two biggest ones are session affinity (i.e. how do you make sure that a user session stays with the same server) and scaling SQL servers whilst maintaining ACID compliance without spending an enormous amount of money. This is where Orbital’s magical sauce comes into play: it’s designed to be both stateless at the core level (as in all of the API functions are RESTful and thus represent a complete transaction with no requirement for session affinity) and not reliant on SQL features like transactions, JOINs and referential integrity.
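Here’s a rough Python sketch of why statelessness removes the need for session affinity. It is not Orbital’s actual API: `SharedStore`, `CoreServer` and the request dictionaries are all hypothetical stand-ins. The point is simply that each request carries everything needed to process it and the servers keep nothing in memory between requests, so it doesn’t matter which box the load balancer picks.

```python
class SharedStore:
    """Stand-in for the shared database that every core server talks to."""

    def __init__(self):
        self.docs = {}


class CoreServer:
    """Hypothetical stateless server: no per-user session is kept here."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def handle(self, request):
        # Each request is a complete transaction in its own right.
        method, key = request["method"], request["key"]
        if method == "PUT":
            self.store.docs[key] = request["body"]
            return {"status": 200, "served_by": self.name}
        if method == "GET":
            if key in self.store.docs:
                return {"status": 200, "body": self.store.docs[key],
                        "served_by": self.name}
            return {"status": 404, "served_by": self.name}
        return {"status": 405, "served_by": self.name}


store = SharedStore()
core_a = CoreServer("core-a", store)
core_b = CoreServer("core-b", store)

# The write goes to one server and the read to another; neither one
# needs to remember anything about the user in between.
put_response = core_a.handle({"method": "PUT", "key": "dataset-1",
                              "body": "my research data"})
get_response = core_b.handle({"method": "GET", "key": "dataset-1"})
```

The read succeeds on `core-b` even though the write went through `core-a`, because the only state lives in the shared store.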

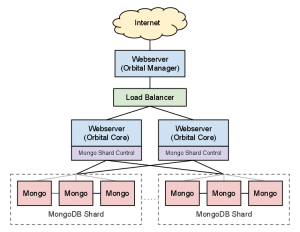

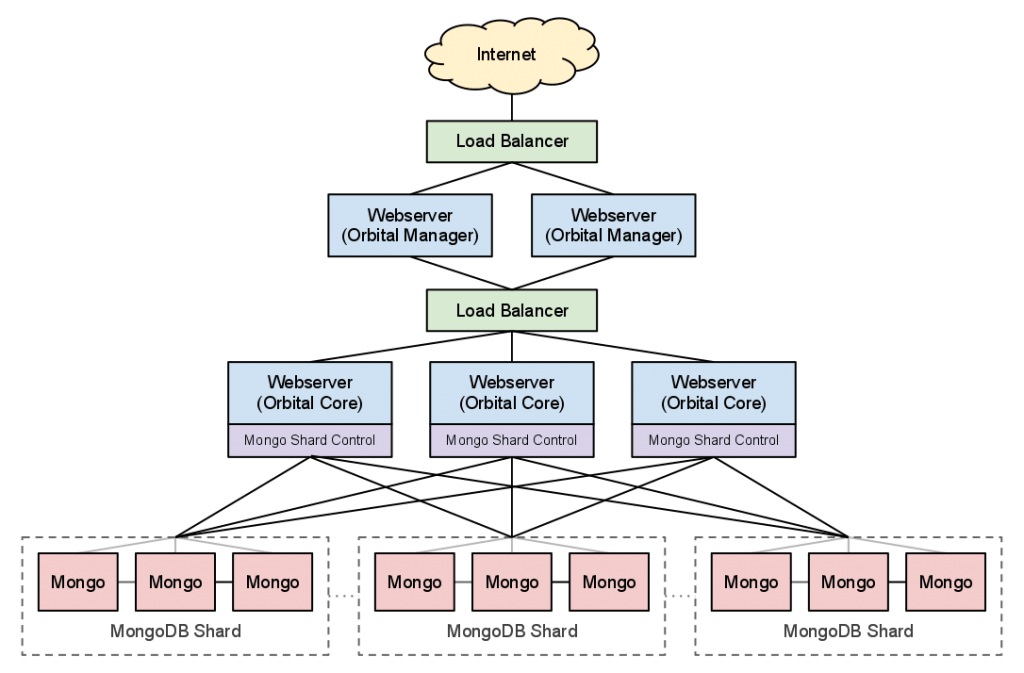

MongoDB provides us with some handy features which can help us dramatically boost performance and resiliency across Orbital, namely sharding (slicing data across multiple servers so a request may be processed by multiple servers in parallel) and replication (keeping multiple identical copies of data on different servers in case one of them explodes). Orbital is also designed to be able to separate the core (as in the bit which actually does the heavy lifting) and the manager (the pretty user interface which most people will be using) across multiple servers without causing stress. The full-blown Orbital platform in an ideal world will be something akin to the following:

At this point you’re probably going “wow, that’s a lot of servers!”, and you’d be right. It’s important to remember, however, that those servers are logical and not necessarily physical (or even virtual) and the whole platform is quite flexible. There is nothing stopping you putting Mongo servers on the same installation as web servers, or making do with fewer ‘full’ servers per shard and moving around arbitration servers (which help keep replica sets working) onto different boxes.

On the other hand, there’s nothing stopping you from going all out should the data requirements start to get somewhat more epic. The model above uses two shards, meaning that each dataset is sliced across two locations (and then replicated three times for resiliency). There’s also only one server for the Manager instance, and this is something else that could be load balanced. For backup purposes each shard could include one replica set box located on a physically distant site, or you could handle obscenely high read volumes by expanding replica sets to include even more servers. By stretching things sideways you avoid the need for monolithic single-point-of-failure machines whilst at the same time staying flexible. It’s a lot easier to provision more servers to supplement a platform than it is to provision a larger server and move things onto it.
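The two-shard, three-copy layout described above can be sketched in a few lines of Python. This is a toy model rather than how MongoDB actually does it: the real thing routes on a shard key, elects a primary within each replica set and replicates asynchronously. The names `shard_for`, `ReplicaSet` and `ShardedCluster` are invented for this example.

```python
import hashlib


def shard_for(key, shard_count):
    """Pick a shard by hashing the key (a stable hash, unlike built-in hash())."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % shard_count


class ReplicaSet:
    """Toy replica set: every member holds an identical copy of the data."""

    def __init__(self, copies=3):
        self.members = [dict() for _ in range(copies)]
        self.down = set()  # indexes of members that have exploded

    def write(self, key, value):
        # Simplification: copy to every member straight away.
        for member in self.members:
            member[key] = value

    def read(self, key):
        # Read from the first member that is still alive.
        for index, member in enumerate(self.members):
            if index not in self.down:
                return member.get(key)
        raise RuntimeError("every member of this replica set is down")


class ShardedCluster:
    """Toy cluster: data is sliced across shards, each shard is a replica set."""

    def __init__(self, shard_count=2, copies=3):
        self.shards = [ReplicaSet(copies) for _ in range(shard_count)]

    def _shard(self, key):
        return self.shards[shard_for(key, len(self.shards))]

    def write(self, key, value):
        self._shard(key).write(key, value)

    def read(self, key):
        return self._shard(key).read(key)


cluster = ShardedCluster(shard_count=2, copies=3)
cluster.write("dataset-1", "some research data")

# Knock out two of the three copies on the shard holding the data.
home = shard_for("dataset-1", 2)
cluster.shards[home].down.update({0, 1})
survivor_read = cluster.read("dataset-1")
```

Even with two of the three copies gone, the read still succeeds from the surviving member, and the other shard never had to store the data at all.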
