The switch to CKAN was an important decision for the Orbital project, and I’d like to think that it will help raise the profile of CKAN within the academic community. We’d been keeping an eye on CKAN development since earlier in the year, but it was the opportunity to talk to Mark Wainwright, OKFN Community Co-ordinator, at the Open Repositories 2012 conference that prompted us to look seriously at the potential of using CKAN as part of Lincoln’s Research Data Management infrastructure. Mark’s OR2012 poster (PDF) provides a nice overview of what CKAN currently offers.

Before I go into more detail about why we think CKAN is suitable for academia, here are some of the feature highlights that we like:

- data entry via web UI, APIs or spreadsheet import

- versioned metadata

- configurable user roles and permissions

- data previewing/visualisation

- user extensible metadata fields

- a license picker

- quality assurance indicator

- organisations, tags, collections, groups

- unique IDs and cool URIs

- comprehensive search features

- geospatial features

- social: comments, feeds, notifications, sharing, following, activity streams

- data visualisation (tables, graphs, maps, images)

- datastore (‘dynamic data’) + file store + catalogue

- extensible through over 60 extensions and a rich API for all core features

- can harvest metadata and is harvestable, too
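To make “data entry via APIs” a little more concrete, here is a minimal sketch of a dataset deposit through CKAN’s Action API (`package_create`). It assumes a CKAN 2.x-style endpoint under `/api/3/action/` and an API key sent in the `Authorization` header; the site URL, key and dataset fields are hypothetical placeholders. The function only builds the HTTP request, so it can be inspected without touching a live instance.

```python
import json
import urllib.request


def build_package_create_request(site_url, api_key, dataset):
    """Build (but do not send) a POST request to CKAN's package_create action.

    site_url, api_key and dataset are caller-supplied placeholders.
    """
    # Assumption: Action API endpoints live under /api/3/action/<action_name>
    url = site_url.rstrip("/") + "/api/3/action/package_create"
    body = json.dumps(dataset).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            # CKAN identifies the depositing user by the API key in this header
            "Authorization": api_key,
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_package_create_request(
        "https://ckan.example.ac.uk",  # hypothetical CKAN instance
        "YOUR-API-KEY",                # hypothetical API key
        {
            "name": "orbital-test-dataset",  # unique, URL-safe name (becomes part of the URI)
            "title": "Orbital test dataset",
            "license_id": "cc-by",           # chosen from the instance's licence list
            "notes": "Example dataset created through the Action API.",
            "tags": [{"name": "engineering"}],
        },
    )
    print(req.get_method(), req.full_url)
    # Sending it for real would be: urllib.request.urlopen(req)
```

The same pattern applies to other actions (for example `package_search` for querying), which is what makes the API a reasonable basis for the command-line and scripted deposit tools researchers ask for.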

You can take a tour or demo CKAN to get a better idea of its current features. The demo site is running the new/next UI design, too, which looks great.

CKAN’s impact

In its five years of development, CKAN has had a significant impact around the world. Although web-scale open data publishing is a relatively recent initiative, CKAN, through the efforts of OKFN, has become the de facto standard for publishing open data, with more than 40 instances running around the world. How do the UK, Dutch, Norwegian and Brazilian governments make their data publicly accessible? The European Commission? They use CKAN.

On the development side, CKAN has attracted significant interest from developers, with 53 code contributors over five years and more than 60 extensions.

Major CKAN changes since Orbital project began

When we first bid for the JISC MRD programme funding, CKAN was a less attractive offering to us. Our bid focused on an approach we’ve taken on a number of projects: using MongoDB as a datastore, over which we would build an application that adds, edits and reads data via a set of APIs we would write. Our bid also focused on security and the confidentiality of commercial engineering data. Since the Orbital project started, CKAN has addressed, or is addressing, these concerns, and features that researchers requested during our engagement with them, such as activity streams and data visualisation, have also been integrated into CKAN. Reading through the CKAN changelog shows just how much work is going into CKAN, and with each release it’s developing into a better tool for RDM. Here are some of the headline features, in order of priority, that have turned our attention to CKAN over the course of the Orbital project.

CKAN in an academic environment

We’ve discussed the idea of a Minimum Viable Product for RDM, and consider it to comprise authentication, data storage, hosting/publishing, licensing, a persistent URI and analytics. These features alone allow an academic to reliably and permanently publish data to support their research findings and help measure its impact. CKAN meets these requirements ‘out of the box’. Other requirements of a tool for managing research data include the following (you can add more in the comment box; these are based on our own discussions with researchers and a quick scan of other JISC MRD projects):

- Integration with the institutional research environment (e.g. hooks into CRIS system, Institutional Repository, DMPOnline, networked storage)

- Capturing the research process/context/activity; notation, not just data

- Controlled access to non-Lincoln staff e.g. research partners

- Good, comprehensive search tools

- Version control for data and metadata

- Customisable, extensible metadata

- Adherence to data standards e.g. RDF

- Multi-level access policies

- Secure, backed up, scalable file storage for anywhere access to files and file sharing (e.g. Dropbox)

- Command-line tools and good web UI for deposit/update of data

- Permanent URIs for citation e.g. DOIs

- Import/export of common data formats

- Linking datasets (by project, type, research output, person, etc.)

- Rights/license management

- Commercial support/widely used, popular platform (‘community’)

RDM features that are currently lacking in CKAN

During our meeting with OKFN staff last month we identified several areas that need addressing for CKAN to meet our wider requirements for RDM. These are:

- Security: CKAN is not lacking in security measures, but we need to look at CKAN’s security model more closely (roles, permissions, access, authentication) and also tie it into the university’s Single Sign On environment

- ‘Projects’ concept: We think that the new ‘organisations’ feature might work conceptually in the same way as this.

- Academic terminology + documentation for academic use: We need to review CKAN and write documentation for an academic use case, as well as provide a modified language file that ‘translates’ certain terminology into terms more appropriate for the academic context.

- Batch edit/upload controls: Certain batch functions are available on the command line, but out of the box there’s no way to, for example, upload and batch edit multiple files.

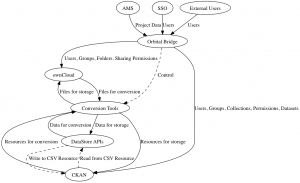

- ownCloud integration: CKAN doesn’t provide the network drive storage that researchers (and, in practice, almost everyone) rely on to organise their files. Increasingly, people are using Dropbox because of its synchronisation and sharing features. These are important to researchers, too, and moving data from such a drive to CKAN will be key to researchers adopting it.

- EPrints integration (SWORD2): A way to create a record of CKAN data in EPrints, thereby joining research outputs with research data.
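Until batch controls exist in the web UI, one stop-gap for the batch upload gap listed above is to script the Action API from a metadata spreadsheet. The sketch below is hypothetical throughout: the CSV columns (`name`, `title`, `license_id`), the site URL and the API key are placeholders, and it assumes a CKAN 2.x-style `/api/3/action/` endpoint. It builds one `package_create` request per row without sending anything.

```python
import csv
import io
import json
import urllib.request

# Hypothetical metadata sheet: one row per dataset to deposit.
SHEET = """name,title,license_id
rig-a-2012,Test rig A results,cc-by
rig-b-2012,Test rig B results,cc-by
"""


def action_request(site_url, api_key, action, payload):
    """Build (but do not send) a POST request for a CKAN Action API call."""
    url = f"{site_url.rstrip('/')}/api/3/action/{action}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": api_key},
        method="POST",
    )


def batch_requests(site_url, api_key, sheet_text):
    """Turn each spreadsheet row into one package_create request."""
    rows = csv.DictReader(io.StringIO(sheet_text))
    return [action_request(site_url, api_key, "package_create", dict(row)) for row in rows]


if __name__ == "__main__":
    reqs = batch_requests("https://ckan.example.ac.uk", "YOUR-API-KEY", SHEET)
    for r in reqs:
        print(r.full_url, json.loads(r.data)["name"])
    # Sending each request with urllib.request.urlopen(r) would create the datasets;
    # a real script should also check the "success" flag in each JSON response.
```

A script like this covers deposit only; batch editing of existing records and attaching the actual files would need further actions, which is part of why we see proper batch controls as a gap to address.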

It’s these features that we’ll be concentrating on in our development on the Orbital project.

Harry and I are attending the Open Knowledge Festival in Helsinki later this month and will talk more about our choice of CKAN for research data. I’d be interested to hear from anyone working in a university who has looked at CKAN in detail and decided against using it for RDM. It seems odd to me that it has such a low profile in academia (or maybe I’m just clueless?), and I do think that the time has come to embrace CKAN and acknowledge the efforts of OKFN more widely. I know there are people like Peter Murray-Rust and Mark MacGillivray who are actively trying to do this, and OKFN’s presence at Dev8D and OR2012 this year demonstrates its eagerness to work more closely with the university sector. Perhaps we’re near a tipping point?

As part of this strand of the project (which cuts across workpackages 7, 11, and 12), I want to consider the following: