Last week Joss and I had a chat with one of our engineering researchers about the kinds of data he handles in his research. This was an incredibly useful meeting, leading to a whole bunch of notes on data types, requirements and workflows. The one I’m taking a look at today is the flow that data takes from its source, through storage and processing, and into a useful research conclusion.

The existing workflow looks something like that shown above. Source data is manually transferred (often using ‘in-the-clear’ methods) from its point of origin to local storage on a researcher’s machine, where it sits on the hard disk until it’s used. From there the data is processed (Engineering love using MATLAB, as do a lot of other science disciplines, so that’s the example here), and the results of that analysis may be written back to local storage for further work. At some point the processing arrives at conclusions about the data, and from those an output can be drawn.

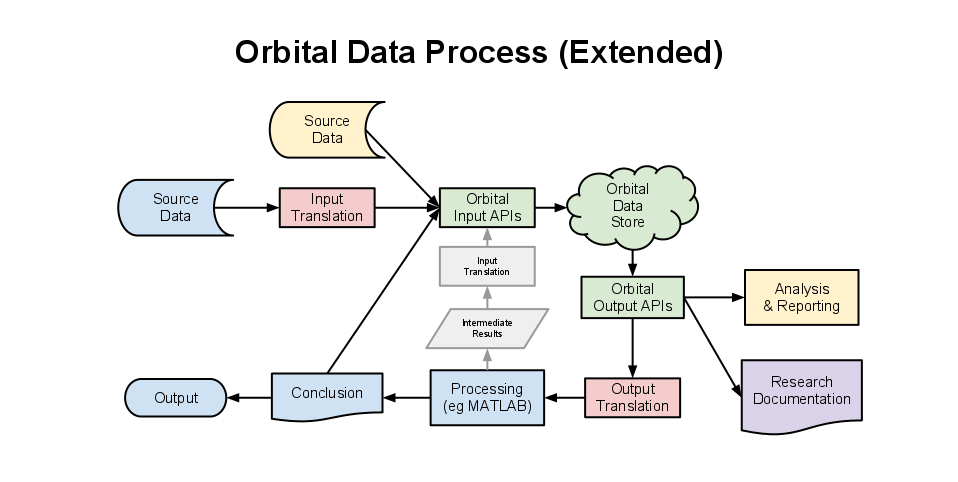

Orbital, as you can see from the diagram below, is something that replaces the ‘store it on the HDD’ bit of the process.

Your initial reaction will probably be something along the lines of “Wait, that’s more complicated! Orbital is supposed to simplify my life!”, and you’d be right. Moving to the proposed architecture (which is very sketchy, and just one possibility) means that extra work is necessary to get data into and out of Orbital’s data store in a usable form. We acknowledge that researchers aren’t necessarily going to be fans of this, so we’ll be trying to add as many obscenely easy ways of working with Orbital as we can.

Hopefully the entire Orbital platform (backup, integrity, management, etc.) will make it worth this extra effort, but if not then we’ve got another diagram to explain why the Orbital method is actually a much nicer way of doing things in the long run.

Here you can see the Orbital system at play for a larger, more interconnected research project. Two data sources feed separately into the project’s data store, and conclusions as well as intermediary results can all be stored right alongside the originals. Analysis and reporting tools can then talk directly to the data store without any intermediary steps, meaning that less time is spent turning one format into another and more time is spent crunching numbers and working out what they mean. Since the Orbital APIs let you request data in all kinds of formats, it’s easier to look at it from different angles, again reducing the amount of time you spend crafting arcane SQL queries and letting you get on with the important bit of actually studying data.
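To make that idea a bit more concrete, here’s a rough sketch of what “one store, many formats” might look like. This is not Orbital’s actual API (which is still very much on the drawing board): `ProjectStore`, `put`, `get`, `lineage` and the example dataset names are all made up for illustration, and the store is just a dictionary in memory. It only shows the shape of the thing: source data and derived results live side by side, and the same rows can be asked for as JSON or CSV.

```python
import csv
import io
import json


class ProjectStore:
    """Toy in-memory stand-in for a project data store.

    Hypothetical: this is an illustration, not Orbital's real interface.
    """

    def __init__(self):
        self._datasets = {}

    def put(self, name, rows, derived_from=None):
        # Store rows (a list of dicts) and note which dataset, if any,
        # they were derived from, so results sit alongside their sources.
        self._datasets[name] = {"rows": list(rows), "derived_from": derived_from}

    def get(self, name, fmt="json"):
        # Return the same rows serialised in whichever format was requested.
        rows = self._datasets[name]["rows"]
        if fmt == "json":
            return json.dumps(rows)
        if fmt == "csv":
            buf = io.StringIO()
            fieldnames = list(rows[0]) if rows else []
            writer = csv.DictWriter(buf, fieldnames=fieldnames, lineterminator="\n")
            writer.writeheader()
            writer.writerows(rows)
            return buf.getvalue()
        raise ValueError(f"unsupported format: {fmt}")

    def lineage(self, name):
        # Walk back from a dataset to the original source it came from.
        chain = [name]
        while self._datasets[chain[-1]]["derived_from"] is not None:
            chain.append(self._datasets[chain[-1]]["derived_from"])
        return chain


store = ProjectStore()

# Two separate sources feed into the same project store.
store.put("sensor_a", [{"t": 0, "strain": 1.5}, {"t": 1, "strain": 2.5}])
store.put("sensor_b", [{"t": 0, "temp": 20.0}, {"t": 1, "temp": 21.0}])

# An intermediate result is stored right alongside the originals.
rows = json.loads(store.get("sensor_a", "json"))
mean_strain = sum(r["strain"] for r in rows) / len(rows)
store.put("mean_strain", [{"source": "sensor_a", "mean": mean_strain}],
          derived_from="sensor_a")

# A reporting tool that prefers CSV asks for the same data differently.
print(store.get("sensor_a", "csv"))
print(store.lineage("mean_strain"))
```

The point of the sketch is that the analysis code never touches a file path or a database query: it names a dataset and a format, and the store does the converting.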

We’ve got more meetings planned to gather further requirements, but we’re now starting to get to grips with what we think people want from the system. Don’t forget that if you’d like to check out our ongoing analysis of what people want, you can always have a peek at our Pivotal Tracker.
