On the 18th February, we ran a workshop in London which focused on the use of CKAN for research data management. The Orbital project made the decision to use CKAN last summer and was soon followed by Bristol’s data.bris project, which is using CKAN for its discovery catalogue. Simon Price from Bristol, gave a very interesting presentation of their work with CKAN, which you can read about on their project blog.

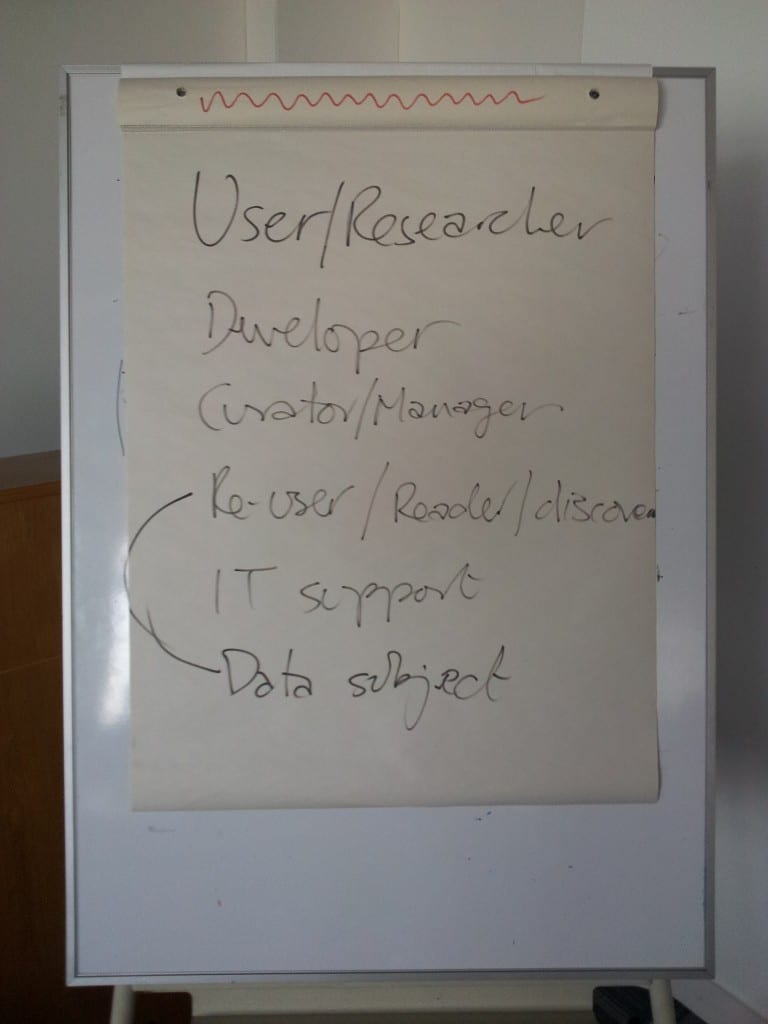

The #CKAN4RDM workshop was fully booked with 40 delegates attending – many more than we originally anticipated. It was facilitated by Simon Hodson, the Programme Manager of JISC’s Managing Research Data programme. Following presentations from Lincoln and Bristol on our respective uses of CKAN (ours was a live demo of ‘Orbital Bridge‘), we spent the later part of the morning undertaking a requirements gathering exercise, where tables of around 8-10 people acted as different users, providing ‘stories’ (requirements) for a research data management system. The exercise was introduced in the following few slides.

This was a useful exercise regardless of the software used, but after collating all 70+ stories over lunch, we then returned to our user groups and each table worked with a CKAN expert from the Open Knowledge Foundation to discuss the existing constraints for each requirement and started to develop a gap analysis so as to identify work to be done. The output of this work can be viewed on Google docs.

There was quite a positive buzz about the day and general feedback suggested that delegates got a lot out of the event. You can read write ups from the DCC, LSE and the Datapool project at Southampton.

One of the original purposes of the workshop was research for a conference paper that I (Joss) am giving at the IASSIST conference in Cologne, in May. The abstract I submitted to the conference was as follows:

This paper offers a full and critical evaluation of the open source CKAN software <http://ckan.org> for use as a Research Data Management (RDM) tool within a university environment. It presents a case study of CKAN’s implementation and use at the University of Lincoln, UK, and highlights its strengths and current weaknesses as an institutional Research Data Management tool. The author draws on his prior experience of implementing a mixed media Digital Asset Management system (DAM), Institutional Repository (IR) and institutional Web Content Management System (CMS), to offer an outline proposal for how CKAN can be used effectively for data analysis, storage and publishing in academia. This will be of interest to researchers, data librarians, and developers, who are responsible for the implementation of institutional RDM infrastructure. This paper is presented as part of the dissemination activities of the JISC-funded Orbital project <https://orbital.blogs.lincoln.ac.uk>.

As well as using last week’s outputs of the CKAN4RDM workshop, I’ll also be working closely with OKF staff to ensure that the evaluation is as thorough, accurate and up-to-date as possible by the time of the conference. It will focus on version 2.0 of CKAN, which is due for release soon.

I’d also like to appeal to other JISC MRD projects to send me any existing requirements documents you have produced during the course of your project. I will use the anonymised data to enrich the requirements we gathered last week. If you have such documents, please email me.

Finally, we have set up a CKAN4RDM mailing list, which anyone is welcome to join to discuss the use of CKAN within academia. One thing is clear to me: the academic community cannot expect OKF and existing CKAN developers to meet all of our requirements for research data management. We need to contribute developer time and other resource and effort to the overall CKAN open source project, just as other public sector organisations are doing.