In December, colleagues in the Web Team (who manage the corporate web site in the Department of Marketing and Communications) approached a few of us about building a tool to allow staff to edit their profile for the new version of the lincoln.ac.uk website. We suggested that much of the work was already done and it just needed gluing together. Yesterday we met with the Web Team again to tell them that our part of the work is pretty much complete. Here’s how it works.

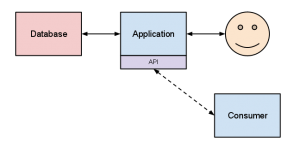

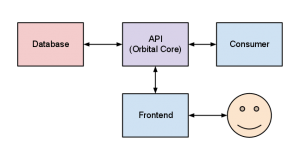

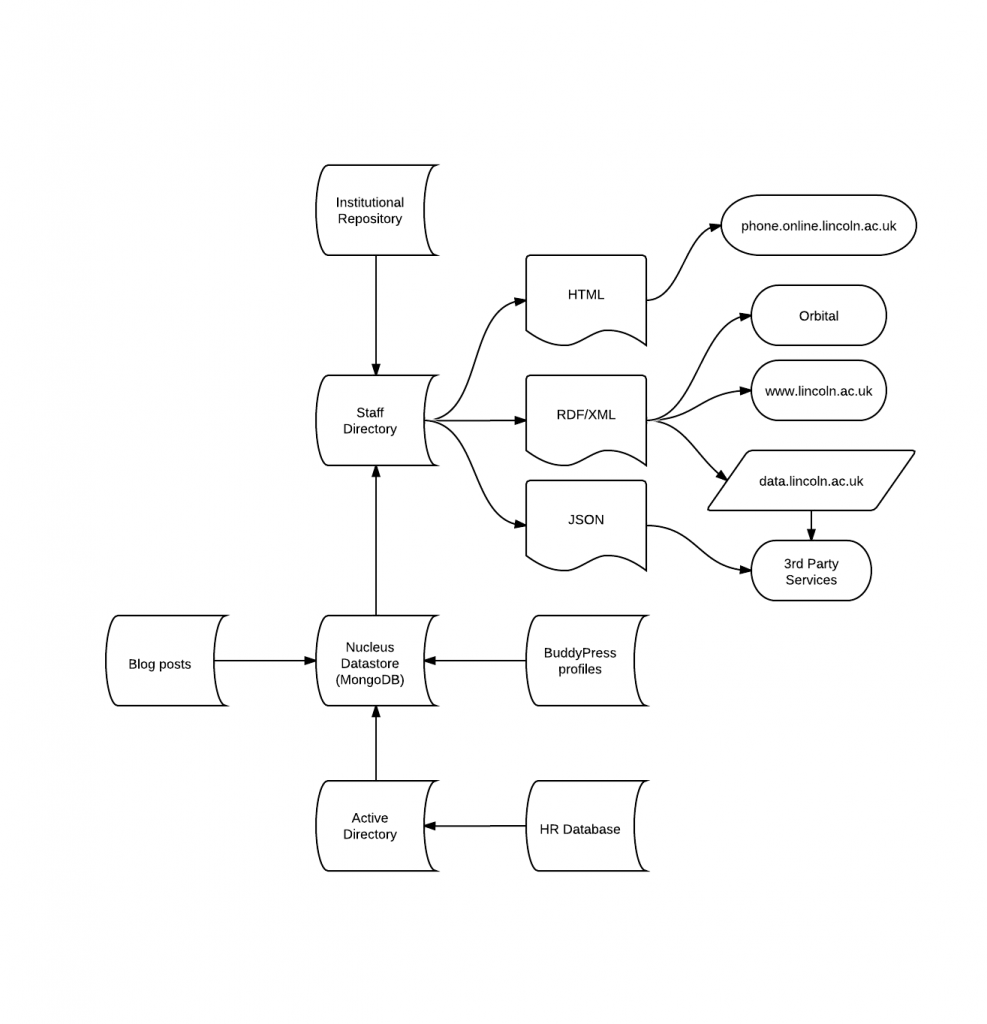

This requires a bit of explanation, but let me tell you, it’s the holy grail as far as I’m concerned and having this in place brings benefits to Orbital and any other new application we might develop. Here’s a clearer rendering.

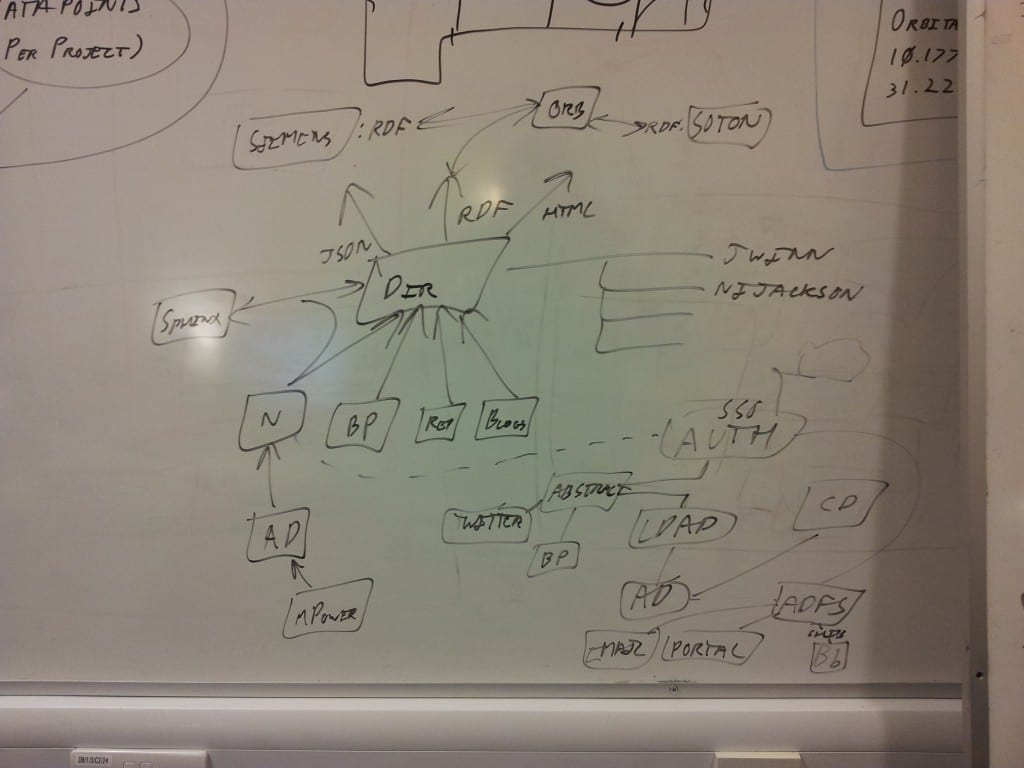

The chart above strips out the stuff around authentication that you see in the bottom right of the whiteboard photo. That’s for another post – something Alex is better placed to write.

Information about staff at the university starts with the HR database. This feeds the Active Directory, which is used to authenticate people across different web services. Last year, Nick and Alex pulled this data into Nucleus, our MongoDB datastore, and used it to build a new, slick staff directory. Then they started bolting things on to it, like research outputs from the repository and blog posts from our WordPress/BuddyPress platform. To illustrate what was possible, they started pulling information from my BuddyPress profile, which I could edit any time I wanted to. It got to the point where I started using my staff directory link in my email signature because it offered the most comprehensive profile of me anywhere on a Lincoln website.
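To make the "bolting on" idea concrete, here is a minimal sketch of how records from several sources might be merged into one profile per person. It is not how Nucleus actually does it: the function name, the `staff_id` key and the field names are all hypothetical, and plain Python lists stand in for the directory, repository and blog data.

```python
def aggregate_profiles(directory_records, repository_outputs, blog_posts):
    """Merge per-source records into one profile per staff member.

    All field names here (staff_id, name, department, title) are
    hypothetical; they are not taken from Nucleus.
    """
    profiles = {}

    # The directory data is treated as the authoritative list of staff.
    for record in directory_records:
        profiles[record["staff_id"]] = {
            "name": record["name"],
            "department": record["department"],
            "research_outputs": [],
            "blog_posts": [],
        }

    # Attach items from the other sources, skipping any that do not
    # match a known member of staff.
    for source, field in ((repository_outputs, "research_outputs"),
                          (blog_posts, "blog_posts")):
        for item in source:
            profile = profiles.get(item["staff_id"])
            if profile is not None:
                profile[field].append(item["title"])

    return profiles


if __name__ == "__main__":
    directory = [{"staff_id": "s1", "name": "Example Person",
                  "department": "Example Department"}]
    outputs = [{"staff_id": "s1", "title": "An example paper"}]
    posts = [{"staff_id": "s1", "title": "An example blog post"}]
    print(aggregate_profiles(directory, outputs, posts))
```

Keying everything on a single staff identifier is the design choice that matters in this sketch: each new source only needs to know that identifier to be attached to the profile.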

By the time we first met with the Web Team about the possibility of helping them with staff profiles, Alex and Nick had 80% of the work already done. What remained was to create a richer set of required fields in BuddyPress that staff can edit about themselves, and to set up a scheduled XML dump for the Web Team to wrangle into their new templates on www.lincoln.ac.uk.

So the work is nearly done. The XML file is RDF Linked Data, so we now have a rich aggregation of staff information, with some simple relationships, that feeds the Staff Directory, is refreshed every three hours, and can be output as HTML, JSON or RDF/XML.
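As a rough illustration of one profile being output in three formats, here is a small sketch using only the Python standard library. The profile fields, the example URIs and the choice of the FOAF vocabulary are my assumptions for the sake of the example; the post does not say which vocabulary the real RDF/XML uses.

```python
import html
import json
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
FOAF_NS = "http://xmlns.com/foaf/0.1/"  # assumed vocabulary, not confirmed


def to_json(profile):
    """Serialise the profile dictionary as JSON."""
    return json.dumps(profile, sort_keys=True)


def to_html(profile):
    """Render a very small HTML fragment, escaping the values."""
    name = html.escape(profile["name"])
    blog = html.escape(profile["blog"], quote=True)
    return f'<div class="profile"><h1>{name}</h1><a href="{blog}">Blog</a></div>'


def to_rdf_xml(profile):
    """Describe the person as a foaf:Person in RDF/XML."""
    ET.register_namespace("rdf", RDF_NS)
    ET.register_namespace("foaf", FOAF_NS)
    root = ET.Element(f"{{{RDF_NS}}}RDF")
    person = ET.SubElement(root, f"{{{FOAF_NS}}}Person",
                           {f"{{{RDF_NS}}}about": profile["uri"]})
    ET.SubElement(person, f"{{{FOAF_NS}}}name").text = profile["name"]
    # A simple relationship: this person has a weblog at this address.
    ET.SubElement(person, f"{{{FOAF_NS}}}weblog",
                  {f"{{{RDF_NS}}}resource": profile["blog"]})
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical profile; the URIs are placeholders.
    example = {
        "uri": "http://example.org/staff/s1",
        "name": "Example Person",
        "blog": "http://example.org/blogs/s1",
    }
    print(to_json(example))
    print(to_html(example))
    print(to_rdf_xml(example))
```

The point is simply that one aggregated record can sit behind every representation, so a scheduled job only has to refresh the record and re-run the serialisers.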

For the Orbital project, all this glue is invaluable. When staff log in to Orbital (Nick’s working on this part right now), we’ll already know who they are, which department they work in, what research outputs they’ve deposited in the institutional repository, what their research interests are, what projects they’re working on, the research groups they’re members of, their recent awards and grants, and the keywords they’ve chosen to tag their profile with. Our intention is that, with some simple AI, we can make Orbital an environment which already knows quite a bit about Researchers’ work and the context of the research they’re undertaking. Once Orbital starts collecting specific staff data of its own, it can feed that back into Nucleus, too.

This reminds me of our discussion last month with Mansur Darlington of the ERIM/REDm-MED project. Mansur stressed the importance of gathering data about the context of the research itself, emphasising that without context, research data becomes increasingly meaningless over time. Having rich user profiles in Orbital, and ensuring that we record data about the Researcher’s activity while using Orbital, should help provide that context to the research data itself.

Orbital, therefore, becomes an infrastructure not only for storing and managing research data, but also for storing and managing data about the research itself.